Visualizations

The GVIS Lab's visualizations let people explore science and environments in an interactive, intuitive way.

The GVIS Lab continually works on a variety of data visualization and interactive technology projects ranging from data analysis to Martian surface simulations.

Explore some of the Lab’s visualizations below.

10×10 Supersonic Wind Tunnel

This visualization lets users explore point cloud displays of the 10×10 Supersonic Wind Tunnel in Building 86, presented through the Cave Automatic Virtual Environment (CAVE) projection system, which provides a unified 3D viewing platform. Located at NASA Glenn, the 10×10 is a major facility dedicated to examining an array of supersonic propulsion elements. The GVIS Lab’s objective is twofold: to give users a look at areas that are typically out of reach, whether for tours or research, and to use point cloud scanning to archive spaces as they exist at a moment in time. The archived scans remain available for reference regardless of future changes to the actual sites.

Subsonic Single Aft Engine (SUSAN)

The SUSAN visualization presents the Subsonic Single Aft Engine (SUSAN), a concept for a hybrid electric commercial jet aircraft. The design incorporates a single aft jet engine, complemented by sixteen electric engines distributed between the two wings in a mailbox configuration. The experimental aircraft has NASA-designed electronic flight displays, which enhance its navigation and control capabilities, and a distinctive T-tail configuration, which contributes to its aerodynamic efficiency. The primary objective of SUSAN is to significantly reduce fuel consumption during flight, in line with NASA’s mission to promote more sustainable and eco-friendly aviation technologies. To learn more about SUSAN, click here!

W8 Facility Digital Twin

The W8 Facility Digital Twin was created to prototype a digital twin of the W8 Facility in virtual reality. The facility and its hardware were recreated using existing CAD models as the foundation, so users can immerse themselves in the virtual environment and navigate through the facility. The virtual model is also augmented: key pieces of geometry and hardware carry informative call-outs that show data including temperature and pressure, taken from actual tests. The primary objective was to work through the process of developing a digital twin in VR and to identify the challenges involved.

Lunar Communications Architecture for Future Artemis Missions

At NASA Glenn, an emulation environment within the MATRICS system is used to test technologies developed during the Lunar LTE Studies Project, or LunarLiTES. LunarLiTES aims to assess the applicability of 4G and 5G technologies in the lunar environment, with the ultimate goal of providing future lunar explorers with the same level of seamless communication they enjoy on Earth.

The LunarLiTES team is enhancing the Multiple Asset Testbed for Research of Innovative Communication Systems (MATRICS) emulation environment by introducing 4G and 5G capabilities. The specific objective is to analyze the performance of 4G and 5G communication systems on the lunar surface.

The lunar communications architecture animation showcases the Lunar LTE Studies Project and highlights the role played by the Space Communications and Navigation (SCaN) team at NASA Glenn. The visualization is intended to educate and engage the public by explaining SCaN’s mission and its contributions within NASA.

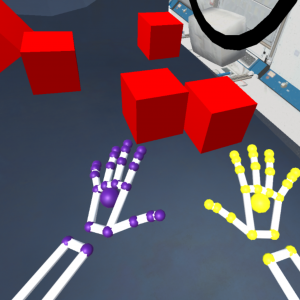

HEMM AR Application

Developed in Unity 3D with C# and optimized for the Oculus Quest 2, this app is a gateway to augmented reality. It uses the device’s external cameras to blend the virtual and real worlds. With intuitive hand tracking and wireless freedom, even beginners can become proficient within minutes. The app’s mission is to democratize augmented reality by letting users experience 3D models and data visualizations affordably. This accessibility paves the way for widespread use in education, capitalizing on the power of visual learning.

W-1A Small Core Research

W-1A is NASA Glenn’s Low Speed Axial Compressor facility. This virtual reality experience allows the user to take an immersive and interactive tour of the facility as it is being used for recent work on jet engine casing treatments. Using a VR headset or the Cave Automatic Virtual Environment (CAVE), the user can explore the facility’s environment, examine the engine, and pick up objects to inspect them more closely in a realistic way. A video featuring the W-1A visualization can be seen on our YouTube channel.

GRC Wind Tunnel Lobby (Building 54) Virtual Tour

The Building 54 virtual tour provides users with a detailed look inside the lobby leading to Glenn’s 9- by 15-Foot Low-Speed Wind Tunnel and 8- by 6-Foot Supersonic Wind Tunnel. A virtual tour guide offers information on the facility and research as the user explores the space. The application was developed for use both on virtual reality headsets and, without VR, on the web. It provides a window into the work being done at Glenn without requiring physical access to the facilities. You can take the tour yourself by clicking here!

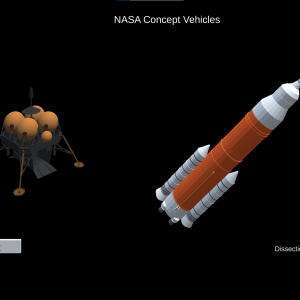

NASA Concept Vehicles

The NASA Concept Vehicles visualization, designed to be used with zSpace, allows users to manipulate, dissect, and learn about experimental vehicles at NASA. zSpace is a 3D augmented reality computer that runs Windows and comes with a stylus and glasses, which extend typical three-dimensional viewing by allowing the user to pull content out of the screen. The visualization consists of a central hub in which users can view all models together, along with options to focus on a single vehicle. The parts of each vehicle are labeled (where information is available) so that when users dissect a model, the app displays information on the specific part they are holding.

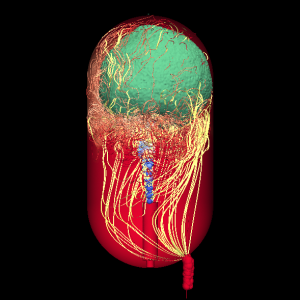

ZBOT

NASA is working to improve storage of propellants in space. One of the most efficient propellants, liquid hydrogen, cannot be stored long-term in space: it must be kept very cold (20 K) or it will boil off. This visualization shows a computer simulation of a liquid hydrogen propellant tank with an internal “jet mixer” that helps equalize the internal fluid temperatures. It will be used to help analyze the effectiveness of this method, with the aim of storing liquid hydrogen more efficiently for space missions.
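
To put the 20 K figure in more familiar units, here is a small conversion sketch in Python. The function and constant names are illustrative only and are not taken from the ZBOT simulation.

```python
# Illustrative unit conversion; names are hypothetical, not from the simulation.

def kelvin_to_celsius(kelvin: float) -> float:
    """Convert a temperature from kelvin to degrees Celsius."""
    return kelvin - 273.15


def kelvin_to_fahrenheit(kelvin: float) -> float:
    """Convert a temperature from kelvin to degrees Fahrenheit."""
    return kelvin_to_celsius(kelvin) * 9.0 / 5.0 + 32.0


# Storage temperature for liquid hydrogen quoted in the text above.
LH2_STORAGE_TEMP_K = 20.0

if __name__ == "__main__":
    print(f"{LH2_STORAGE_TEMP_K:.0f} K = "
          f"{kelvin_to_celsius(LH2_STORAGE_TEMP_K):.2f} degC = "
          f"{kelvin_to_fahrenheit(LH2_STORAGE_TEMP_K):.2f} degF")
```

In everyday units, 20 K is about −253 °C, or about −424 °F.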

X-57 Looking Glass Visualization

The X-57 visualization displays NASA’s concept electric aircraft, the X-57, using the Looking Glass. The Looking Glass is a 2-inch-thick display that uses polarized glass to render a 3D model in a hologram-like manner, helping the user see depth in a 3D visualization that might otherwise look two-dimensional. The X-57 is powered by 12 high-lift motors and 2 large motors, all of which are electric. This visualization contains an animation of the X-57 flying as well as a separate animation of a cross section of one of the high-lift motors, which shows the motor’s thermal loads during operation. Using the Looking Glass allows these visualizations to be viewed with depth rather than as a flat 2D animation.

International Space Station Visualization

The International Space Station (ISS) visualization allows the user to virtually explore a model of the ISS. This is accomplished through a combination of technologies including Leap Motion, Xbox, and virtual reality. With these technologies, the user is able to navigate the ISS environment as if they were in space. The visualization also includes a game in which the user interacts with and stacks 3D blocks inside the ISS’s Microgravity Science Glovebox (MSG). The Leap Motion infrared sensor on the front of the Oculus VR headset tracks the user’s hands down to the individual fingers, allowing them to move and stack the 3D blocks without the aid of a controller. This visualization was created by a GVIS Lab intern over two internship sessions and is used to help demonstrate what is possible with experimental VR technology. Click here to learn more!

Urban Air Mobility Project

The Urban Air Mobility (UAM) task within the Unmanned Aircraft Systems in the National Airspace Command and Control Subproject attempts to identify the communications challenges associated with UAM operations. At NASA Glenn, the UAM team has developed a concept of operations for command and control of UAM, which the GVIS Lab has modeled as a 3D simulation that can be run in various VR environments. The UAM visualization is currently run in the GRUVE Lab’s Cave Automatic Virtual-Reality Environment (CAVE), a three-walled virtual reality space. Here, users wear stereo shutter glasses and walk around the CAVE to observe full-scale models of concept drones up close and watch them fly in a virtual airport. Viewing the UAM in this way helps researchers identify design challenges that are not easily determined by other methods. Additionally, this simulation in the CAVE can be viewed by individuals or large groups, making it an accessible and immersive experience.

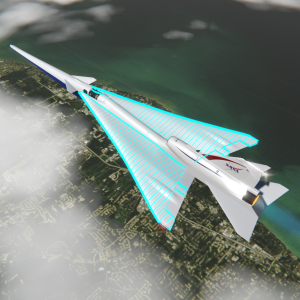

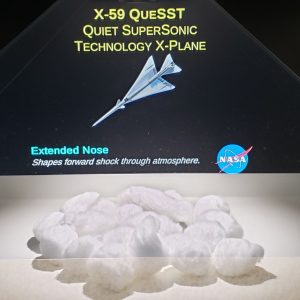

X-59 Quesst – Quiet Supersonic Mission

Can we develop a quieter supersonic aircraft? This is the question that NASA’s X-59 Quesst mission and the GVIS Lab’s Quesst visualization explore. Commercial planes aren’t allowed to fly faster than the speed of sound over land because they would create sonic booms, which are very loud and disruptive to humans and animals. The X-59 is an experimental aircraft that could lead to future supersonic commercial travel over land, drastically cutting flight times. This interactive virtual reality visualization drops the user into NASA Glenn’s 8×6 wind tunnel, where they can explore the flight test model and data from a wind tunnel test. Next, they are teleported to 50,000 feet in the air to explore the design features of the aircraft as it cruises at Mach 1.4. Inside the cockpit, an audio demonstration compares a traditional sonic boom with Quesst’s quieter supersonic thump. A non-virtual reality version of the visualization makes use of a commercial flight simulator chair and motion platform that engages users by giving them a sense of movement as they virtually explore the aircraft in flight. This simulation gives NASA a portable and interactive tool to show researchers, partners, government officials, and the public the capabilities of Glenn’s facilities and the goals of the X-59 Quesst mission in an engaging and accessible way.
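
For a sense of scale, the cruise condition quoted above (Mach 1.4 at 50,000 feet) can be converted to a true airspeed. The following Python sketch assumes the International Standard Atmosphere (ISA) and treats the quoted altitude as ISA altitude. The function names are illustrative and are not part of the Quesst visualization.

```python
import math

# Assumed ISA constants (not from the source text).
GAMMA_AIR = 1.4          # ratio of specific heats for air
R_AIR = 287.05           # specific gas constant for air, J/(kg*K)
FEET_TO_METERS = 0.3048


def isa_temperature_k(altitude_m: float) -> float:
    """ISA temperature in kelvin, valid from sea level up to 20 km."""
    if altitude_m < 0.0 or altitude_m > 20000.0:
        raise ValueError("this sketch only covers 0 to 20,000 m")
    if altitude_m <= 11000.0:
        # Troposphere: temperature falls 6.5 K per kilometer.
        return 288.15 - 0.0065 * altitude_m
    # Lower stratosphere: constant temperature.
    return 216.65


def speed_of_sound_mps(temperature_k: float) -> float:
    """Speed of sound in air treated as an ideal gas, in m/s."""
    return math.sqrt(GAMMA_AIR * R_AIR * temperature_k)


def mach_to_true_airspeed_mps(mach: float, altitude_ft: float) -> float:
    """True airspeed in m/s for a Mach number at an ISA altitude in feet."""
    temperature_k = isa_temperature_k(altitude_ft * FEET_TO_METERS)
    return mach * speed_of_sound_mps(temperature_k)


if __name__ == "__main__":
    speed = mach_to_true_airspeed_mps(1.4, 50000.0)
    print(f"Mach 1.4 at 50,000 ft is about {speed:.0f} m/s")
```

Under these assumptions, Mach 1.4 at 50,000 feet works out to roughly 413 m/s, a little over 920 miles per hour. Actual conditions on a given day would differ from the standard atmosphere.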

Interactive Holographic Aerospace Models

NASA has a large collection of 3D models of aircraft, space vehicles, satellites and more. The 3D model viewer created by the GVIS Lab uses a hologram display, Leap Motion, and touch screen technology to provide a simple interactive viewer to examine a wide variety of these 3D models. The user navigates through the list of models and chooses one to display. The Leap Motion sensor can then be used to move and spin the model to further examine it.

Boundary Layer Ingestion Testing

One of the ways to improve aircraft efficiency is to reduce the drag on the aircraft using the aircraft’s own engines. Engineers at Glenn are testing a new kind of propulsion system using a principle called Boundary Layer Ingestion (BLI). Analytical studies have shown that this new technology has the potential to reduce the aircraft fuel burn by as much as 8.5% compared to aircraft flown today. This interactive virtual reality visualization demonstrates the testing conditions, tunnel modifications, design elements and challenges of the BLI test performed in Glenn’s 8×6 Supersonic Wind Tunnel. The user controls a few simple facility parameters to visually demonstrate their effects on the BLI condition and fan engagement. Click here to learn more!

HoloAero–NASA Concept Vehicles

The HoloAero application is a HoloLens app that lets the user see three NASA concept aircraft in the context of their current environment. For example, the user can display an aircraft model in the room they are in, making it appear as if the aircraft is floating in that room. Users can walk around the aircraft, scale it, spin it, and have it fly, all with voice commands. There are three models shown by the application:

- Single-aisle Turboelectric Aircraft with an Aft Boundary-layer Propulsor (STARC-ABL): an aircraft design developed by NASA that places the engine in the back of the aircraft and is specifically designed to be more fuel efficient than aircraft designs of the past.

- N3-X Hybrid Wing Body

- Supersonic Transport

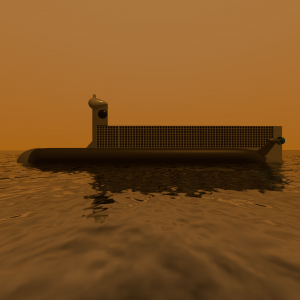

COMPASS Lab Titan Submarine Concept

The Titan Submarine visualization is a virtual reality experience that allows users to explore a proposed mission concept vehicle as it floats on the surface of Kraken Mare, a methane ocean on one of Saturn’s moons, Titan. While on the surface, one can hear the Titan wind, recorded by the European Space Agency’s Huygens space probe (one of the few sound recordings taken from another planetary body). Users can then take the submarine under the surface of the ocean and navigate down to the sea floor. The GVIS Lab created this application in partnership with Glenn’s Collaborative Modeling and Parametric Assessment of Space Systems (COMPASS) Lab to visualize what exploring Titan’s oceans might entail. In the end, another concept was chosen to move forward, but the GVIS Lab still uses the application as an educational tool to discuss the capabilities of the lab and planetary exploration.

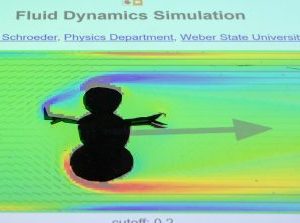

Augmented Reality Fluid Flow Table

The Augmented Reality Fluid Flow Table lets users put physical objects onto a table and have a computer predict the flow past the object in real time. The colors represent the rotation of the flow, with green being laminar flow from left to right, blue being clockwise rotation, and red being counter-clockwise rotation. The arrow shows the force vector on the object due to the flow. The table is mostly used for education and for discovering principles of fluid flow, but similar technology has been used by researchers, engineers, and doctors to explain everything from airfoils and vascular systems to wind tunnels and kayak oars.
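
The color scheme described above can be read as a map of vorticity, the local rotation of the flow. The sketch below is a minimal Python illustration, not the table's actual software: the function names, the grid layout (row index increasing upward), and the threshold value are all assumptions made for this example. It estimates vorticity with central differences and assigns green, blue, or red following the convention in the text.

```python
# Hypothetical sketch: classify flow rotation by the sign of the vorticity.
# u[j][i] and v[j][i] are the x and y velocity components at x = i*dx,
# y = j*dy, with y assumed to increase upward.

def vorticity(u, v, dx=1.0, dy=1.0):
    """Return dv/dx - du/dy at interior grid points (edges are left at 0)."""
    ny, nx = len(u), len(u[0])
    w = [[0.0] * nx for _ in range(ny)]
    for j in range(1, ny - 1):
        for i in range(1, nx - 1):
            dv_dx = (v[j][i + 1] - v[j][i - 1]) / (2.0 * dx)
            du_dy = (u[j + 1][i] - u[j - 1][i]) / (2.0 * dy)
            w[j][i] = dv_dx - du_dy
    return w


def rotation_color(w, threshold=0.1):
    """Map a vorticity value to the color convention described in the text."""
    if w > threshold:
        return "red"    # counter-clockwise rotation
    if w < -threshold:
        return "blue"   # clockwise rotation
    return "green"      # little or no rotation


if __name__ == "__main__":
    n = 5
    # Uniform left-to-right flow: no rotation anywhere.
    u = [[1.0] * n for _ in range(n)]
    v = [[0.0] * n for _ in range(n)]
    print(rotation_color(vorticity(u, v)[2][2]))
```

A uniform left-to-right flow has zero vorticity and maps to green, while a flow spinning counter-clockwise about the grid center has positive vorticity and maps to red.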

Spacesuit Fitting Room

Become an astronaut on the screen and swipe through the various spacesuits that astronauts use depending on what they are doing. This interactive augmented reality experience puts the user into a virtual world on the Moon. You can also plant the U.S. flag on the Moon while there. What makes this visualization unique is that it was developed by a high school student who is currently interning with NASA’s Graphics and Visualization (GVIS) Lab.

NASA’s Work on the Moon Animation

The “NASA’s Work on the Moon” animation is a visualization designed to be played alongside the Spacesuit Fitting Room visualization. The animation joins an astronaut on the Moon who tells the viewer about the upcoming Artemis mission, lunar dust, and golfing!

Visualization Archive

Had fun reading and learning about our visualizations? Click here to visit the Visualization Archive and learn about even more of our projects!

Contact Us!

Need to reach us? You can send an email directly to the GVIS Team at GRC-DL-GVIS@mail.nasa.gov or to the Team Leader, Herb Schilling, at hschilling@nasa.gov. Like our content? Follow us on social media @nasa_gvis on Twitter and Instagram!